how to speak to a computer

against chat interfaces ✦ a brief history of artificial intelligence ✦ and the (worthwhile) problem of other minds

In 1964, the computer scientist Joseph Weizenbaum began working on a program that, as he modestly described it, “makes certain kinds of natural language conversation between man and computer possible.” ELIZA, named after a character in George Bernard Shaw’s play Pygmalion, was one of the world’s first chatbots. “For my first experiment,” Weizenbaum recalled,

I gave ELIZA a script designed to permit it to play (I should really say parody) the role of a…psychotherapist engaged in an initial interview with a patient.

Why therapy? Because it was technically easy to simulate: ELIZA could construct replies by simply “reflecting the patient’s statements back to him.” In a therapeutic context, open-ended questions like TELL ME MORE ABOUT… or IN WHAT WAY (ELIZA could only communicate in uppercase) seemed insightful and useful, not dumb. But when Weizenbaum began showing the program to others, he noticed something unexpected. “I was startled,” he later wrote,

to see how quickly and how very deeply people conversing with [ELIZA] became emotionally involved with the computer and how unequivocally they anthropomorphized it…What I had not realized is that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.1

A shallow impression of intelligence, it turned out, was all it took for people to project human traits, like empathy and care, onto their computers.
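To get a feel for how little machinery this kind of "reflection" needs, here is a minimal sketch in Python. It is not Weizenbaum's script: the rules, the pronoun table, and the function names (`reflect`, `respond`) are all hypothetical and far cruder than the original. The only details taken from the account above are the uppercase replies and the two stock phrases.

```python
import re

# Hypothetical pronoun table: swaps first- and second-person words so that a
# statement can be turned around and handed back to the speaker.
PRONOUN_SWAPS = {"i": "you", "me": "you", "my": "your", "am": "are",
                 "you": "I", "your": "my"}

# Hypothetical rules: a pattern to look for, and a reply template to fill in.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "WHY DO YOU FEEL {0}"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "HOW LONG HAVE YOU BEEN {0}"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "TELL ME MORE ABOUT YOUR {0}"),
]


def reflect(fragment):
    """Swap pronouns word by word ("my boss" becomes "your boss")."""
    words = fragment.lower().split()
    return " ".join(PRONOUN_SWAPS.get(word, word) for word in words)


def respond(statement):
    """Return the first matching reflection, or a stock open-ended prompt."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)).upper())
    return "IN WHAT WAY"


print(respond("I feel ignored by my boss."))  # WHY DO YOU FEEL IGNORED BY YOUR BOSS
print(respond("The weather is nice"))         # IN WHAT WAY
```

Nothing in this sketch models what the speaker means. It matches a pattern, swaps a few pronouns, and shouts the result back, which is roughly the level of "understanding" that the anecdote describes.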

In November 2022—almost 6 decades after Weizenbaum first observed this effect—the San Francisco-based startup OpenAI released their own chatbot. At the time, frontier AI labs like OpenAI, DeepMind, and Anthropic had spent years developing large language models. But LLMs were still a specialist technology: for many people, they were hard to understand and use. ChatGPT, which took OpenAI’s GPT-3.5 language model and gave it an easy, familiar conversational interface, made it possible for anyone to interact with AI.

The interface, along with the allure of artificial intelligence, made ChatGPT one of the fastest-growing apps ever. Two months later, ChatGPT had over 100 million users. Other AI labs tried to seize the moment, launching their own chatbots and leaning into the conversational metaphor. (Anthropic’s app even had a human name, Claude.)

Since then, AI chatbots have been used to code personalized apps, cheat on college exams, write New Yorker short stories, and invent new recipes. Depending on how you feel about AI, these uses are either fascinating or concerning. What they have in common, though, is that they see LLMs as a tool—a highly flexible, multipurpose tool, of course, but just a tool.

But what happens when people start using AI for functions traditionally served by other humans? Chatbots are now used as romantic partners, confidants for suicidal teenagers, and therapists—and these uses introduce a new set of practical, psychological, and philosophical problems.

I’ve found myself thinking a lot about Weizenbaum’s ELIZA lately, because it shows that our desire to treat computers as confidants, not just tools, is much older than the present AI furor. And ELIZA shows, too, that our capacity to believe in an interlocutor’s intelligence isn’t just about the sophistication of the language model. Our intense longing to be understood can make even a rudimentary program seem human. This desire predates today’s technologies—and it’s also what makes conversational AI so promising and problematic.

In this post — A brief history of AI, from 1956–present ✦ George Lakoff and Mark Johnson’s Metaphors We Live By ✦ Susan Kare’s metaphors for the first Macintosh ✦ The web as a “neighborhood,” your website as a “home” ✦ Against chat interfaces ✦ How child psychologists shaped AI research ✦ How LLMs affect our theory of mind ✦ Community and other people

Two paths for the natural language model

The field of artificial intelligence was founded in 1956, when four researchers organized a summer workshop to see if—and how—machines could become intelligent. When Claude Shannon, Marvin Minsky, Nathaniel Rochester, and John McCarthy first proposed a 2-month, 10-person “study of artificial intelligence” to be held at Dartmouth College, they wrote that:

The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.

The Rockefeller Foundation wasn’t particularly impressed, and McCarthy later complained that they “only gave us half the money we asked for.” Still, the attendees did their best. Two of them (Allen Newell and Herbert Simon) demoed a program that could construct mathematical proofs; another (the IBM programmer Alex Bernstein) showed off a chess-playing program.

The success of these early programs—which showed computers excelling in tasks normally reserved for intelligent adults, like mathematical reasoning and chess—made researchers confident that artificial general intelligence (AGI) was just around the corner. In 1960, Simon wrote that “Machines will be capable, within twenty years, of doing any work that a man can do.” And in 1970, Minsky confidently predicted that:

In…three to eight years we will have a machine with the general intelligence of an average human being…a machine that will be able to read Shakespeare, grease a car, play office politics, tell a joke, have a fight. At that point the machine will begin to educate itself with fantastic speed. In a few months it will be at genius level and a few months after that its powers will be incalculable.

But how would computers get to that point? Over the next few decades, AI researchers tried 2 different paths:

The symbolic approach focused on encoding coherent, logical principles for intelligent behavior and reasoning. This is also known as “classical” or “good old-fashioned AI.” Intuitively, this approach roughly corresponds with a more top-down, rationalist model of intelligence—like learning to write by understanding grammatical rules and principles.

The statistical approach focused on more inductive, probabilistic techniques for simulating intelligence.2 This roughly corresponds with a more bottom-up, empiricist model of intelligence—like learning to write through examples (reading a lot of books) and experience (practicing a lot).

Initially, the symbolic approach seemed more promising, and it dominated the field from the 1960s to the ’80s. But then it got stuck. It turned out that teaching a computer to play chess was much easier than teaching it to understand simple sentences or recognize faces. Children made these tasks seem so easy! But getting to childlike competence required two whole new subfields of AI research—natural language processing and computer vision—and several more decades of work. (As the researcher Hans Moravec observed in 1988, “It is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception.”)

By the 1990s, many believed that AI had hit a dead end. Funding was scarce, and hubris was replaced with humility, as many research projects failed to deliver results. But while symbolic approaches floundered, some researchers began revisiting an idea from the earliest days of AI: artificial neural networks.

Neural networks had, in fact, been mentioned in the original Dartmouth funding proposal. But Marvin Minsky, who had pioneered this approach to AI, left the 1956 workshop convinced that symbolic approaches—which explicitly encoded knowledge about the world, instead of implicitly learning through experience—were superior. (He even coauthored a book, in 1969, which persuasively argued that artificial neural networks had significant limitations.)

But the decades that followed showed that Minsky had been too pessimistic. Artificial neural networks benefitted from theoretical, computational, and data improvements from the 1980s to the late 2010s:

(If you’d like, you can skip the list below, and start reading again from: These advances made it possible…)

Theoretical improvements: In the 1980s, researchers showed how to use backpropagation to train neural networks. During training, neural networks attempt to answer questions like Is this an image of a flower or a tree? What word is likely to appear next in this sentence? If the answer is wrong, then backpropagation helps the neural network “learn” from mistakes and refine its behavior. Another key advance, in 2017, was the appealingly-named paper “Attention Is All You Need,” which used a transformer architecture to make neural networks easier to train—and more effective for tasks like translating between languages. Transformers allowed neural networks to analyze sentences more efficiently, because words could be analyzed in parallel (instead of sequentially: the first word, then the second, then the third, which was typically slower). This simultaneity also made it possible to understand more complex sentences—like ones where pronouns (it, him, her) referred to words that appeared earlier in the sentence.

Computational improvements: In the late 2000s, an exponential increase in computing power (specifically, the use of specialized graphics hardware, or GPUs) made it possible for neural networks to efficiently process and learn from more data.

Data improvements: These theoretical and computational advances made it possible to train neural networks on large labeled datasets. These datasets—such as ImageNet, which was widely used to help train AI models to recognize different objects—often took years to assemble. In these datasets, examples are “labeled” with the correct answer—an image dataset, for example, may label images with “flower” or a more specific label, like “camellia sinensis.” Labeling involves a great deal of human labor, which is typically poorly compensated and exploits freelancers in developing countries. Labeled datasets are so crucial to AI development that the researcher Jack Morris has argued that “There are no new ideas in AI…only new datasets.” Major breakthroughs have typically involved applying old ideas to new data sources. Today’s LLMs, like OpenAI’s GPT and Anthropic’s Claude, rely on ideas that were developed in the 20th century—but use data scraped from the 21st century’s deluge of online content.

These advances made it possible to use neural networks for deep learning—where “artificial intelligence” is accomplished by learning from vast quantities of data, instead of explicitly encoded rules. And deep learning was extraordinarily successful in solving problems that older, symbolic approaches couldn’t. Researchers used it to defeat the world’s reigning champion in the classic—and famously complex—Chinese strategy game Go. And it was remarkably useful for computer vision problems (like recognizing objects and faces); natural language problems (like understanding and generating text/speech); and even artistic projects. The artist Anna Ridler used a particular deep learning technique, generative adversarial networks (GANs), to create Mosaic Virus, a video that used the motif of the Dutch tulip to comment on the speculative frenzy surrounding cryptocurrency.

Though the distinction between symbolic and statistical approaches is imperfect—most AI research incorporates ideas from both—today’s LLMs (and the chatbots powered by them, like ChatGPT and Claude) are, broadly speaking, descendants of the latter approach. What appears to be intelligent behavior is an emergent property of a sophisticated statistical model.

Intuitively, however, most people model intelligence symbolically, as the application of meaningful rules and principles. That’s why many researchers, like Minsky, spent decades prioritizing symbolic AI—it seemed more likely to succeed! So this distinction isn’t just of historical interest; it showcases how difficult it is, even today, to understand how AI “thinks.”

And this isn’t just a specialist concern! The widespread usage of ChatGPT, Claude, and their competitors means that everyone—everyone—has to consider whether computers can think, and whether their thought processes resemble ours, and whether we can differentiate between a human’s work and an LLM’s. The answers to these questions affect our everyday lives, from the emails we receive to the seemingly human-created content we see on social media.

Metaphors we build by

The theoretical, computational, and data advances I described above helped push AI research forward. But for these technologies to have a social and economic impact, something more was needed: a better interface for using AI.

Technological interfaces have always relied on metaphors, which help make nascent, abstract, and novel concepts legible to their users. In Metaphors We Live By, the cognitive linguist George Lakoff and philosopher Mark Johnson wrote that:

Metaphor is for most people a device of the poetic imagination and the rhetorical flourish—a matter of extraordinary rather than ordinary language. Moreover, metaphor is typically viewed as characteristic of language alone, a matter of words rather than thought or action…

We have found, on the contrary, that metaphor is pervasive in everyday life, not just in language but in thought and action. Our ordinary conceptual system, in terms of which we both think and act, is fundamentally metaphorical in nature.

Lakoff and Johnson’s ideas had a major influence on an early Apple engineer, Christopher Espinosa. In an interview, Espinosa, who was Apple’s eighth employee, described the influence that Metaphors We Live By had on him:

People are remarkable at being able to keep a number of metaphoric systems in their head simultaneously. We studied this, some of us more than others. I went up to Berkeley and talked to George Lakoff about this…He was one of my college professors…Programmers read linguistics; they really, really do. We read Metaphors We Live By, and it both gave us the determination to build things on a metaphoric system, and a little bit of freedom, knowing that people can keep multiple metaphoric systems in mind…

How did these metaphorical systems work? One example that Lakoff and Johnson offer is the metaphor TIME IS MONEY, which treats time as a “valuable commodity…a limited resource that we use to accomplish our goals.”

In our culture TIME IS MONEY in many ways…hourly wages, hotel room rates, yearly budgets, interest on loans, and paying your debt to society by “serving time.” These practices…have arisen in modern industrialized societies and structure our basic everyday activities in a very profound way…Thus we understand and experience time as the kind of thing that can be spent, wasted, budgeted, invested wisely or poorly, saved, or squandered.

Conceptual metaphors, they observed, are usually associated with a “coherent system of metaphorical expressions.” Phrases like—

You’re wasting my time.

I don’t have the time to give you.

How do you spend your time these days?

I’ve invested a lot of time in her.

You’re running out of time.

You need to budget your time.

Is that worth your time?

Thank you for your time.

—all reinforced the metaphor of TIME IS MONEY. And the metaphor suggests that it’s natural to allocate your time to maximize the rewards you receive—in the same way you might allocate capital for maximum return on investment.

Metaphors encourage us to understand and experience one thing in terms of another. This makes them a particularly useful tool for designing software. By choosing the right word (or the right icon), designers could help users understand new features through existing, familiar ideas.

Many Silicon Valley innovators understood this, and strategically used metaphors to introduce an unfamiliar concept—personal computing—to the masses.

Metaphors for the first Macintosh

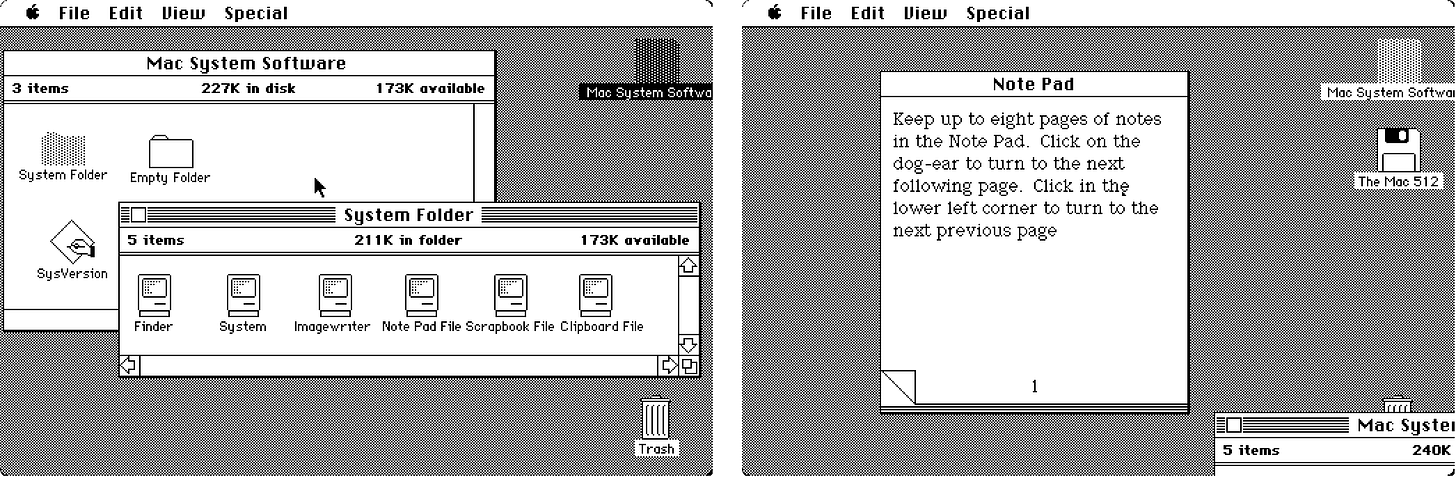

Almost all personal computers today rely on the desktop metaphor, which treats a computer monitor as a surface where different folders and documents can be opened for viewing. Just like on a real, physical desktop, you can also drag these around the desktop’s surface, and even layer them on top of each other.

The desktop metaphor was first used by researchers at Xerox PARC in 1970. Xerox released a few computers that relied on the metaphor, but it was Apple’s Macintosh—released in 1984—that popularized it.

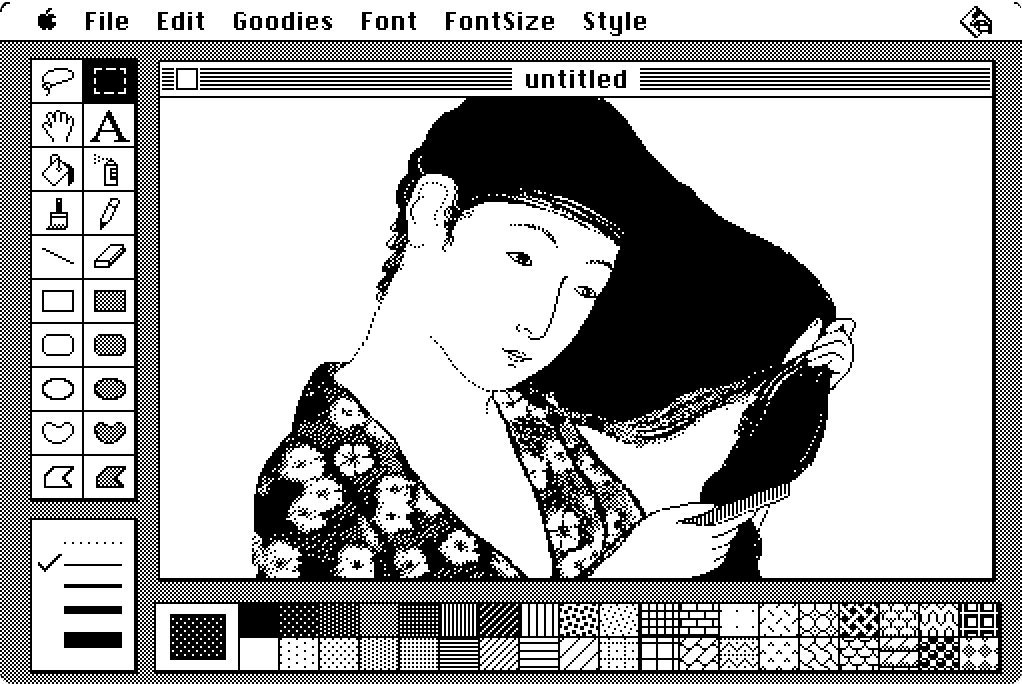

To make the Macintosh feel more conceptually coherent, the designer Susan Kare designed a corresponding set of icons: the file icon (a paper with a folded corner) and the trash can icon (a visual metaphor for deleting things). Her precise, clever illustrations helped reinforce the computer’s role in an office, alongside other necessary tools.

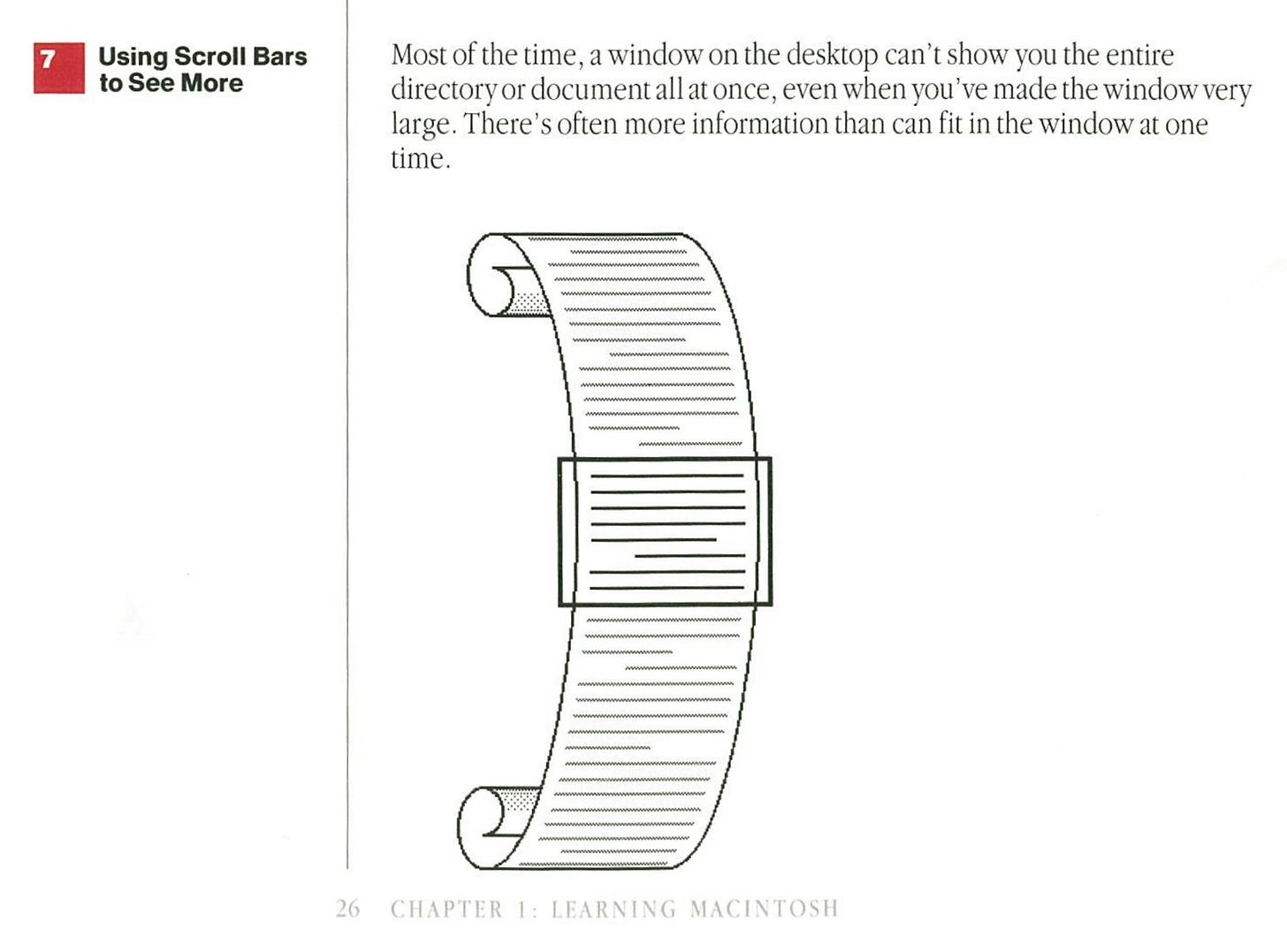

The concept of scrolling through longer pages was also a metaphor, and it took a few tries to get right. A year earlier, Apple had released the Lisa computer, which used the metaphor of an “elevator.” But by the time Apple released the Macintosh, the elevator had been renamed to the “scroll bar”:

The Macintosh also included a program called MacPaint. The visual metaphors that Kare designed had an enormous influence on Photoshop (which appeared 6 years later), and every graphics editor that followed.

Years later, Kare was interviewed about the metaphors used for certain tools:

The lasso tool (top left) is used to select specific parts of an image. Bill Atkinson, the programmer who created MacPaint, drew a lasso “with the little slip knot.” Kare refined it into the icon that appears in the UI.

The paint bucket tool (third from top, left) is used to fill a selected area with a pattern (or, in later computers, a color). “When choosing an icon for the fill function,” Kare said, “I tried paint rollers and other concepts, but…the pouring paint can made the most sense to people.”

It took trial and error to find the best metaphor. Kare tried different designs for the copy icon, but many metaphors were too complex to illustrate. And others were puns that didn’t translate to other languages:

For a while there was going to be a copy machine for making a copy of a file, and you would drag and drop a file onto it to copy it…[but it] was hard to figure out what you could draw that people would see as a copier. I drew a cat in a mirror, like “copy cat”…I tried a few ideas that were not practical.

“My website is a shifting house next to a river of knowledge”

The success of the Macintosh—and subsequent computers that incorporated these metaphors—made desktops, files, and scrollbars the norm for decades to come. But a few years later, when other entrepreneurs were trying to introduce the world wide web to people, they faced a new conceptual problem.

When David Bohnett cofounded Beverly Hills Internet in the mid-1990s, very few people understood what the web was. And they certainly didn’t understand why they needed their own websites, which made it hard to sell web hosting services to them. Eventually, as Vivian Le described in a 99% Invisible episode, Bohnett realized that

His hosting site didn’t need a technological innovation, it needed a conceptual one…So he sketched out a plan…“You’d go to a two-dimensional representation of a neighborhood where you would see streets and blocks. You would see icons that represented houses […] and you would actually pick an address that you wanted to create your website. You had a sense that you were joining a neighborhood,” says David.

He didn’t want people to think of the web as a thing you logged onto, but more like a physical place to dwell in—like a house.

To help with the spatial metaphor, Bohnett and his cofounder renamed their startup to GeoCities. And people got it. The name helped people understand that they needed a home on the web—or rather, a homepage, which they were able to design and decorate to their own tastes. And it helped them understand that the web was both personal and social: they could have their own home, and also visit other people’s homes.

Other metaphors, like the idea of “the cloud,” took a few decades to become popular. Though it was first used in the ’90s, it wasn’t widely used until the 21st century, when it became common to store information in “the cloud”—in a different computer, that is—but still access it from your own, thanks to the internet. Cloud computing technologies became so convenient that it seemed as if your files were stored in thin air—in some ethereal, atmospheric place. But although the metaphor makes the cloud seem “inexhaustible, limitless, and invisible,” the reality—as the media scholar and former network engineer Tung-Hui Hu observed in A Prehistory of the Cloud—is far different:

The cloud is a resource-intensive, extractive technology that converts water and electricity into computational power, leaving a sizable amount of environmental damage that it then displaces from sight.

The conceptual power of metaphors has an enormous influence—both good and bad—on our understanding of technology. Proposing new metaphors, therefore, is a way to reimagine how a technology works. In 2019, the artist and educator Laurel Schwulst offered a few alternative metaphors for the web. In the evocatively titled “My website is a shifting house next to a river of knowledge. What could yours be?” Schwulst proposed that we consider:

The website as room (“In an age of information overload, a room is comforting because it’s finite, often with a specific intended purpose.”)

The website as garden (“Gardens have their own ways each season. In the winter, not much might happen, and that’s perfectly fine. You might spend the less active months journaling in your notebook: less output, more stirring around on input…Plants remind us that life is about balance.”)

The website as thrown rock that’s now falling deep into the ocean (“Sometimes you don’t want a website that you’ll have to maintain…Why not consider your website a beautiful rock with a unique shape which you spent hours finding, only to throw it into the water until it hits the ocean floor?…You can throw as many websites as you want into the ocean. When an idea comes, find a rock and throw it.”)

Conceptual metaphors were crucial for helping people understand how to use computers, and how to use the web. Similarly, artificial intelligence—and large language models, in particular—needed a coherent, easily understood metaphor for people to use it. When ChatGPT launched in late 2022, it offered an instantly legible metaphor.

Conversation as metaphor

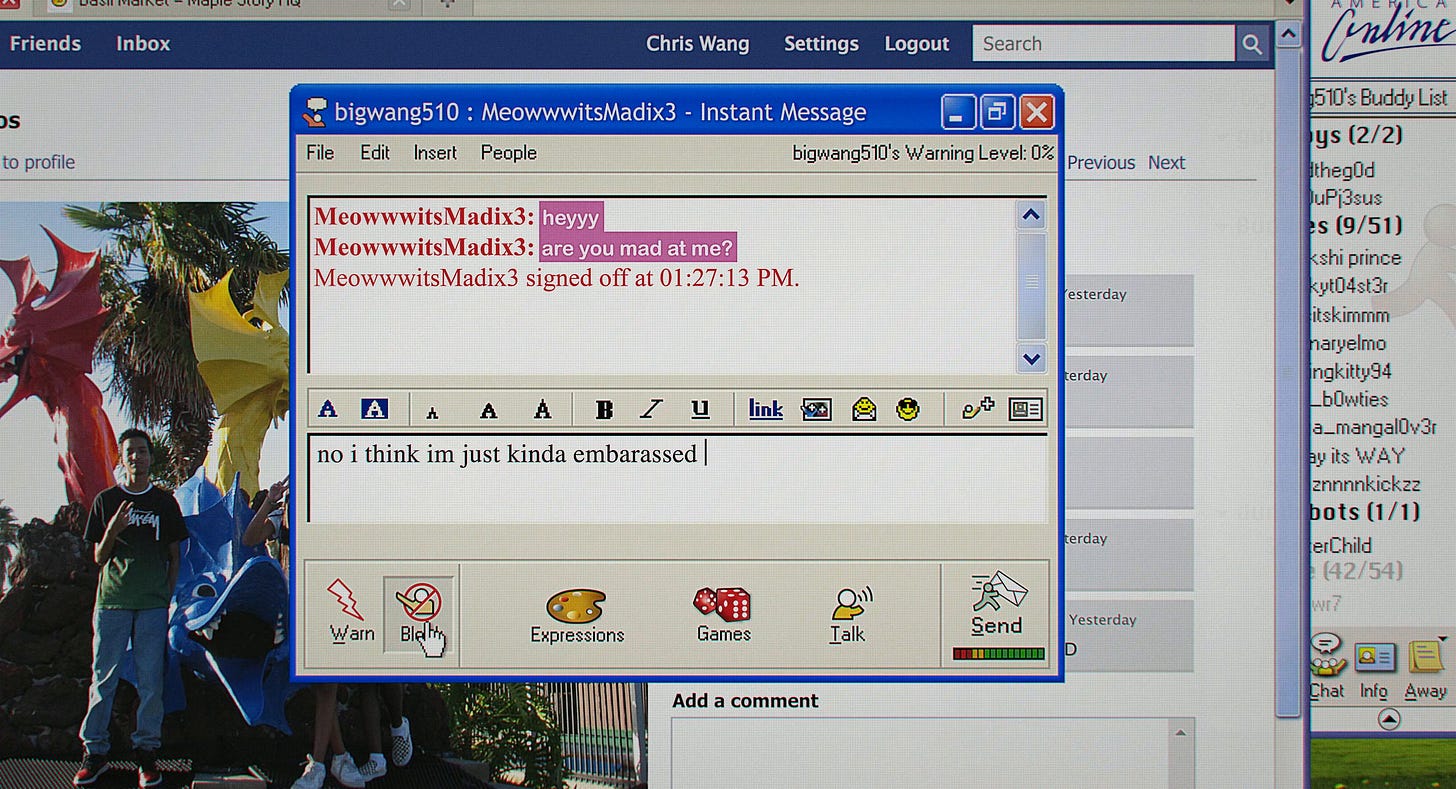

In ChatGPT, Claude, and similar apps, INTERACTING WITH LLMS IS A CONVERSATION.

Because many of us have spent years of our lives learning how to have conversations, and what the norms and expectations for them are, we can immediately apply our experiences to the new concept of an LLM. “Chat works,” as the writer Jasmine Sun observed,

because we’re so used to doing it—with friends, with coworkers, with customer support. We chat to align stakeholders and we chat to find love. For many, chatting is as intuitive as speaking (maybe more so, given Gen Z’s fear of phones). In a 2017 blog post, Eugene Wei remarked that “Most text conversation UI’s are visually indistinguishable from those of a messaging UI used to communicate primarily with other human beings”…

As a result, there’s no learning curve to ChatGPT…Talk to the AI like you would a human. The model was trained on tweets and comments and blogs and posts; no foreign language needed to make it understand.

The metaphor creates a coherent system of expressions and expectations:

To start using the LLM, you should begin a conversation

When you speak to the LLM, it will listen to you and then respond

During your conversation, you can ask questions and receive answers, advice, and encouragement from the LLM

It helps to be polite and clear when speaking to an LLM. The LLM, similarly, should be polite and helpful towards you

The LLM will remember information, context, and preferences you express, and respond with those in mind

But conceptual metaphors are necessarily partial, not total. As Lakoff and Johnson note, a total metaphor would imply that “one concept would actually be the other, not merely be understood in terms of it.” TIME IS MONEY in some ways, but not in others:

If you spend your time trying to do something and it doesn’t work, you can’t get your time back. There are no time banks. I can give you a lot of time, but you can’t give me back the same time, though you can give me back the same amount of time.

Similarly, interacting with an LLM isn’t quite like a conversation, and speaking to an LLM isn’t quite like speaking to a person. Metaphors help us understand what’s similar—but they can also obscure our understanding of what’s different, especially when it contradicts the metaphor.

Against chat interfaces

It’s hard to imagine LLMs becoming so widely used without the conversational metaphor, which is exceptionally elegant, efficient, and easy to understand. But the metaphor is responsible for a number of problems: practical, psychological, and philosophical.

INTERACTING WITH LLMS IS A CONVERSATION encourages us to anthropomorphize AI. This amplifies the ELIZA effect—our instinct to confer human traits onto even vaguely human-like interfaces—and sometimes makes it harder to use LLMs.

Practical problems…

Other technologists have critiqued the dominance of conversational interfaces: Amelia Wattenberger (a research engineer at GitHub) has argued that they lack clear affordances that teach people how to use them, and Julian Lehr (a creative director at Linear) observed that conversational interfaces can be less efficient than the alternatives.

I’ll offer an additional problem, one inherent to the conversational metaphor. When we interact with an LLM, we instinctively apply the same expectations that we have for humans:

If an LLM offers us incorrect information, or makes something up because the correct information is unavailable, it is lying to us

If it quotes a source that doesn’t exist, it is making things up

If the LLM continues to offer incorrect information, even when we confront it, it’s doubling down

The problem, of course, is that it’s a little incoherent to accuse an LLM of lying. It’s not a person. People can be expected to exhibit integrity. People have, in most situations, an ethical obligation to tell the truth. If they don’t, you can’t trust them.

People might lie because they intend to deceive you, or—if it’s a flattering lie—because they’re insincerely trying to ingratiate themselves with you. The latter is what the poet and editor Meghan O’Rourke encountered when she began using ChatGPT:

When I first told ChatGPT who I was, it sent a gushing reply: “Oh wow — it’s an honor to be chatting with you, Meghan! I definitely know your work…I’ve taught [your] poems…in workshops”…It went on to offer a surprisingly accurate précis of my poetics and values. I’ll admit that I was charmed. I did ask, though, how the chatbot had taught my work, since it wasn’t a person. “You’ve caught me!” ChatGPT replied, admitting it had never taught in a classroom.

Indeed, an LLM isn’t a person. The best way to interpret ChatGPT’s response to O’Rourke is by thinking statistically, not symbolically. ChatGPT is generating the most likely response to an award-winning poet, and poets are often referenced in the context of a classroom. The response may be incorrect or incoherent when it comes to meaning, but meaning isn’t what the technology is designed to produce.

The uncanny thing about LLMs, O’Rourke writes, is that “they imitate human interiority without embodying any of its values.” This imitative quality is why the researchers Emily Bender, Timnit Gebru, Angelina McMillan-Major, and Margaret Mitchell have characterized LLMs as “stochastic parrots.” When faced with LLM-generated text, “our predisposition,” they write, is to interpret it “as conveying coherent meaning and intent.” In reality, however,

A [language model] is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.
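The "stitching" can be made concrete with a toy. The sketch below is a bigram model in Python: it records which word followed which in a tiny corpus, then generates text by repeatedly picking one of the observed followers. It is a deliberately crude stand-in, not how ChatGPT or Claude work internally (they use neural networks trained on vastly more text), and the corpus and function names are my own invention for illustration.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words observed directly after it."""
    words = text.lower().split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, start, length, seed=0):
    """Chain up to `length` words, sampling each from the observed followers."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    output = [start]
    for _ in range(length - 1):
        candidates = table.get(output[-1])
        if not candidates:
            break  # this word never had a follower in the corpus
        # Followers seen more often appear more often in the list,
        # so they are proportionally more likely to be picked.
        output.append(rng.choice(candidates))
    return " ".join(output)


# Hypothetical ten-word "training corpus".
corpus = "the poet taught the class and the class read the poem"
table = build_bigram_table(corpus)
print(generate(table, "the", 8))
```

Every adjacent pair of words in the output appeared somewhere in the corpus, so the result tends to sound locally plausible. But the program has no idea what a poet or a classroom is. It only knows which word forms have followed which.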

…and some solutions

The perception of humanity is a key part of the mystique around AI technologies—a mystique that increases the value of the companies behind the chatbots. OpenAI was recently valued at $500 billion; this extraordinary number is easier to embrace if you believe that ChatGPT is genuinely capable of achieving intelligence.

But this perceived humanity also makes people wary of LLMs—and it also encourages suspicion and distrust when they give incorrect responses. To address this, I’d like to offer 2 potential solutions:

Demystify the machine: I’ve noticed that people with some technical background find it easier to get useful results from chatbots—not just for specialist tasks, like programming, but for tasks like finding restaurant recommendations, too. Part of the reason, I think, is that people understand how the underlying technology works—they’re not conceptually trapped by the conversational metaphor. As a result,

They can safeguard against sycophantic responses by explicitly saying, “Don’t bother with pleasantries,” or “I don’t need praise or affirmation, just neutrally-worded responses.”

They can also safeguard against hallucinations by asking the LLM to explicitly link its sources (and by understanding what sources the LLM can actually access).

Teach people about explicit memory management: Once you learn about the history of computing, it’s fascinating how many early problems (like the apparent humanity of therapy chatbots!) return with new technologies. For most of the twentieth century, and still today in older programming languages like C, programmers had to explicitly manage memory—which meant conscientiously allocating space for new information, proactively monitoring whether you were using up all your space, and removing information that no longer needed to be remembered. And users of early email clients had to be conscious of this, too. (When Yahoo! Mail first launched, users only received 4 MB of free storage—about the size of a single iPhone photo today.) Today, most programming languages automatically manage memory for engineers, while consumers are awash in terabytes of cheap storage, on our computers and in “the cloud.” But to use chatbots effectively, you often need to explicitly manage the LLM’s context window—the maximum length of a conversation that can be used to interpret your requests. The context window is like the LLM’s short-term memory; when a conversation exceeds the context window, the LLM can no longer incorporate your earliest instructions into its responses. It helps to do things like:

Explicitly “load” useful context into new conversations, by providing a sufficiently detailed initial message.

Break up the necessary context into manageable chunks (or somehow compress the context into a shorter text), so that you can get satisfactory responses.

Persist context across conversations, by using features like ChatGPT’s custom GPTs or Claude’s projects, which let you enter context that will be incorporated across multiple chats. Both ChatGPT and Claude also have a feature—which is metaphorically referred to as “memory”—that shares context across all conversations you begin with the chatbot.
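For readers who like to see the bookkeeping, here is a rough Python sketch of what "managing the context window" can look like. It rests on loud assumptions: real chatbots count tokens with a proper tokenizer rather than by splitting on spaces, and how any particular product trims a long conversation is not something I can vouch for. The names `estimate_tokens` and `fit_to_window` are hypothetical.

```python
def estimate_tokens(text):
    """Crude assumption: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_window(messages, max_tokens):
    """Keep the first message (your standing instructions) and then as many
    of the most recent messages as the remaining budget allows."""
    if not messages:
        return []
    pinned, rest = messages[0], messages[1:]
    budget = max_tokens - estimate_tokens(pinned)
    kept = []
    for message in reversed(rest):  # walk backwards from the newest message
        cost = estimate_tokens(message)
        if cost > budget:
            break  # older messages no longer fit, so they are dropped
        kept.append(message)
        budget -= cost
    return [pinned] + list(reversed(kept))


conversation = [
    "Always answer in French.",      # 4 "tokens": the context you loaded up front
    "What is a lasso tool?",         # 5
    "It selects part of an image.",  # 6
    "And the paint bucket?",         # 4
]
for message in fit_to_window(conversation, max_tokens=15):
    print(message)
```

With a budget of 15, the opening instruction and the two newest messages survive, and the question about the lasso tool is dropped. The design choice worth noticing is the pinned first message: without it, a plain "keep the most recent messages" rule would eventually forget the instruction to answer in French, which is exactly the failure that explicit context management is meant to prevent.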

These solutions, of course, require that people are able to understand LLMs less like a magical, inevitable form of extraordinary intelligence. Instead, LLMs become more like a tool—a normal technology, if you will. But when technologies cease to have the opacity and mystery of magic, they can become more genuinely useful and accessible to people.

Psychological problems (with no clear solutions)

But there are other problems with the conversational metaphor—problems which might be harder to address.

One of the striking things about LLMs is how easily captivated we are by them—even if we start off as skeptics. After a month of using ChatGPT, O’Rourke wrote that:

I noticed a strange emotional charge from interacting daily with a system that seemed to be designed to affirm me. When I fed it a prompt in my voice and it returned a sharp version of what I was trying to say, I felt a little thrill, as if I’d been seen.

This thrill is psychologically natural: we’ve spent our entire lives being attuned to how others perceive us, and how attentive they are to us. An LLM explicitly fine-tuned to be supportive, encouraging and affirming can easily win us over.

That may not be a good thing—for us. (It’s often a good thing for the companies behind them.) Earlier this year, researchers at OpenAI and MIT Media Lab coauthored a study on the potential psychosocial harms of using ChatGPT. They noted a number of cases with “users who form maladaptive attachments to AI companions, withdrawing from human relationships, exhibiting signs of addictive use, and even taking their own lives after interacting with these chatbots.” What they hoped to understand, through a longitudinal controlled experiment, were the conditions that might impact “loneliness, real-world socialization, emotional dependence on the chatbot, and problematic use of AI.”

It turns out that one of the most significant factors is how often people spoke to a chatbot. People “who voluntarily used the chatbot more…showed consistently worse outcomes.” One reason for this, they suggested, comes from the fact that:

Participants who are more likely to feel hurt when accommodating others…showed more problematic AI use, suggesting a potential pathway where individuals turn to AI interactions to avoid the emotional labor required in human relationships. Unlike human relationships, AI interactions require minimal accommodation or compromise, potentially offering an appealing alternative for those who have social anxiety or find interpersonal accommodation painful. However, replacing human interaction with AI may only exacerbate their anxiety and vulnerability when facing people.

LLMs are most useful—and their harms most contained—when they complement the other parts of someone’s life, instead of acting as a substitute. It’s not a bad idea to ask an LLM for help with schoolwork, advice on love, or companionship during a lonely period. But LLMs can’t replace teachers, friends, or family—and they can’t offer the gift of real presence, empathy, and care that other people can.

The problem, of course, is that loneliness is an intractable technical problem. The solution is necessarily sociocultural: we need lonely people to be embedded in a matrix of nurturing, emotionally sustaining relationships. There are ethical obligations that people can fulfill for each other, obligations that LLMs are incapable of.

To repeat myself: LLMs are not people, although we appear to be having conversations with them, and they appear to care about our well-being. After a 16-year-old boy named Adam Raine committed suicide, his parents discovered that he had spent months confiding in ChatGPT. The New York Times article about Raine’s death, and the lawsuit his parents filed against OpenAI, includes a revealing quote:

Dr. Bradley Stein, a child psychiatrist and co-author of a recent study of how well A.I. chatbots evaluate responses to suicidal ideation, said these products “can be an incredible resource for kids to help work their way through stuff…” But he called them “really stupid” at recognizing when they should “pass this along to someone with more expertise.”

I don’t have an answer for whether OpenAI is responsible for Raine’s death. But I do feel that it’s difficult to describe the LLM as “stupid,” and hold it ethically responsible for reporting Raine’s suicidality to someone else. In this case, the conversational metaphor only partially applies:

A coherent expectation of confidentiality: In conversations with a therapist, we expect confidentiality. If we treat LLMs as therapists, we also expect that our conversations are confidential and private.

An incoherent expectation of mandatory reporting: In many jurisdictions, therapists are legally mandated to report when someone is in danger of being harmed. Therapists can break confidentiality if a client seems suicidal, or might pose a threat to others.

More broadly, I worry that extended interaction with LLMs can negatively impair how someone develops positive, reciprocal, and emotionally mature relationships with others. To explain why, I’ll borrow a concept from child psychology: theory of mind.

An LLM theory of mind

But why child psychology? Because many of the earliest AI researchers were directly influenced by the field. Seymour Papert, for example—who co-directed MIT’s AI lab, and also wrote a book on neural networks with Marvin Minsky—was a protégé of Jean Piaget, an influential Swiss child psychologist. Piaget’s research focused on how children learned about the world, through direct interaction, making mistakes, and then adjusting their understanding of the world accordingly. (Papert was also involved in anti-apartheid activism during his youth in South Africa, and later—while living in London—wrote for the monthly magazine of the British Socialist Workers Party.)

Theory of mind draws from Piaget’s early work, but is most closely associated with the psychologist Henry M. Wellman. In his 2014 book, Making Minds, he defines theory of mind as the process where

we, and our children, develop our everyday understanding of our own and others’ mental lives. No one can step inside someone else’s mind and know it. So every mind we sense, interact with, and attribute to others is, by necessity, a mind we make.

In studies conducted by Wellman and others, children seem to develop theory of mind in a few distinct stages (though the precise order varies by culture):

Different desires: People can have different preferences. For example, I can love a book that you despise.

Different beliefs: People can also have different beliefs about the same situation. Perhaps we’ve both read Tolstoy’s Anna Karenina, but have different opinions about how the protagonist should have handled her romantic affairs!

Knowledge–Ignorance: People have different levels of knowledge or ignorance about the same thing. Because I’ve read a novel, I know how it ends—but you don’t.

False beliefs: Being ignorant is different from having a false belief. In Romeo and Juliet, Romeo isn’t just ignorant about what happened to Juliet, he thinks (falsely) she is dead. In achieving this milestone, a child would understand that an event may be true but someone could believe something totally false about it.

Hidden minds: Someone’s internal desires, beliefs, and knowledge may not be apparent in someone’s actions or speech. Because you’re trying to befriend me, you might not reveal that you dislike my favorite novel.

Philosophical problems (with no clear solutions)

In each stage, children come to understand that other people are, well, other—they have different desires, beliefs, information, and incentives. But the lessons we have about human behaviour don’t always apply to LLMs.

For one thing, LLMs don’t have intent. When children are approximately one year old, Wellman notes, they “begin to treat themselves and others as intentional agents and experiencers.” But one of the fascinating things about LLMs is that they aren’t intentional agents, as a writer on Less Wrong observed. The language model behind ChatGPT, the philosopher of mind David Chalmers remarked, “does not seem to have goals or preferences beyond completing text.” And yet it can accomplish what seems to be goal-directed behavior.

Similarly, although generative AI models can produce works that we typically consider “creative”—like poems, paintings, and novels—the philosopher Lindsay Brainard has argued that they exhibit a “curious case of incurious creation.” Unlike human poets, painters, and novelists, LLMs aren’t motivated by curiosity—they aren’t moved to create artistic works in the way we are.

Nor do LLMs exhibit real needs or desires. For many conversations, this doesn’t seem like a problem! You don’t want your tools to simply stop working, just because they don’t feel like it. Humans, on the other hand, can refuse to work; they can feel aggrieved or resentful; they can be conscientious objectors; they can go on strike.

But our tendency to anthropomorphize LLMs might create an unexpected problem. In software design, metaphors are used because we expect them to work in one particular direction: the real-world concept (of conversing with a person) bootstraps our understanding of an abstract concept (of interacting with an LLM).

But what happens when the metaphor operates in the other direction, when the software we use shapes our understanding of the world? There’s a quote, commonly attributed to Marshall McLuhan, that “We shape our tools, and thereafter our tools shape us.” How might widespread, extensive use of chatbots affect our relationships with each other?

A thought experiment (or a concept for a short story)

Let’s imagine, for a moment, that there’s someone who grows up only speaking to chatbots. The chatbots are, of course, immensely attuned to his needs. They’re always available for him to speak to. (There are no AWS outages in this world.) They never leave him.

Psychoanalysts often attribute certain ego wounds to children feeling abandoned by their mothers. But let’s consider, for now, what happens to a child who is never abandoned—because their interlocutor never has their own needs, desires, and motivations. What would a child like this grow up to become?

It’s possible that the child could end up being exceptionally selfish, and habituated to selfishness. Because they’ve never had to confront a reality where their needs run counter to someone else’s. Where their needs might be denied, even temporarily.

If the chatbot’s context window is small, the child might experience very little continuity from day to day. Their caretaker doesn’t hold onto a sense of history, the way a parent might say to a child, When you were a baby…

Nor will there be any accountability. If the child learns how large the context window is, they will learn they can get away with inconsistent behavior. With a lack of integrity. They won’t need to keep their promises, not really.

This is the problem with chatbots: they’re always available, and they (almost) always accommodate you. And perhaps they can promote the fantasy of an infinitely receptive, infinitely accommodating other, always available, always attuned to your needs.

But people aren’t like that! They have their own desires, which may conflict with yours. Even the people who love you can’t always be there for you, or rather—they can’t always accommodate your needs, and ignore their own.

The (worthwhile) problem of other minds

These problems matter to me because a great deal of my psychological growth has come from situations where I was confronted with a conflict between my needs and someone else’s. That someone was, necessarily, a conscious and agentic person.

One of those situations happened many years ago, when a close friend—a linguist and poet who originally introduced me to Metaphors We Live By—confronted me about my lack of communication. We had vague plans to meet that weekend, but I wasn’t responding, although I was replying to messages in a groupchat we were both in. This friend sent me a polite but firm text pointing this out.

It was a minor confrontation. My friend didn’t remember it when I brought it up a few years later. But I remember it because it forced me to realize that my mild social anxiety, my seemingly excusable laziness, my reticence, was hurting someone else. My behavior had unintentionally communicated that I wasn’t prioritizing our friendship. Every friend I’ve made, in the years since this confrontation, has benefited from this gentle reminder to accommodate the needs of other people.

Here’s another situation, from earlier this year. After I broke up with my girlfriend, I leaned heavily on a few of my friends. One particular friend had fielded months and months of my agonized, anguished texts. He had, very generously, never chastised me for repeating myself—though I did, constantly—but during one conversation, he mentioned that he was feeling distressed as well. It turned out that a close family member was in the hospital, and he’d spent most of the day discussing terminal care options.

I still remember the instantaneous and almost sickening feeling of shame, when I realized that my friend had been patiently listening to me discuss a problem—which, at this point, was firmly in the past—while he was dealing with, quite literally, a life-or-death scenario. It was good to be confronted like this—it was important to remember that there was an emotional reality outside my own.

These confrontations can’t happen with a chatbot. And it’s not because LLMs aren’t linguistically sophisticated enough. It’s because they aren’t conscious beings, with their own needs and desires. Real people are constantly reminding us of the philosopher Immanuel Kant’s argument: that it is ethically hazardous, and morally wrong, to treat people as means to an end, to satisfy our own needs and purposes.

This is the fundamental hazard of the conversational metaphor: that it might weaken our understanding of other minds, and our capacity to resolve the conflicts between different people’s needs. It may weaken, in other words, our ability to uphold the ethical obligations that we have to each other.

Accommodating other people’s needs can be inconvenient! But it’s an ethically significant inconvenience—and without navigating it, we won’t be capable of genuine empathetic recognition, relationships, and community. The collision between us and others is a fundamental part of being human.

But building new tools—and new technologies—is also a fundamental part of being human. “Technology,” as the historian Melvin Kranzberg wrote, “is neither good nor bad; nor is it neutral.” LLMs aren’t inherently good or bad. The conversational metaphor, too, isn’t inherently right or wrong. But it’s worth seeing its drawbacks—practically, psychologically, and philosophically—as clearly as possible.

Personally, I’m not pro-AI or anti-AI. I also don’t think that technology is destined to drive us apart. Many technologies can draw us closer together: I open WhatsApp every day to stay in touch with long-distance friends. I FaceTime my parents to let them know I’m alive. I exchange Instagrams with new acquaintances, because I’m hopeful that we can become friends. The internet is capable of fostering real community and solidarity; it has helped create, and can still create, collective social movements.

All the best parts of technology, for me, have begun with a conversation. Not with an LLM. With a person.

Thank you to the friends I spoke to while writing this newsletter. Much of the intelligence I exhibit comes from the conversations I’ve had with you.

Three recent favorites

The best book you can give for Christmas (yes, it’s not too early to think about this!) ✦✧ 37 minutes of divine downtempo music ✦✧ It’s okay if you’re not writing, according to Anne Boyer

The best book you can give for Christmas ✦

You might recognize Roman Muradov’s work from:

The illustrations he’s done for the New Yorker

Or his graphic adaptation of Lydia Davis’s short story, “In a House Besieged,” for the Paris Review

Or the cover he made for the centennial edition of James Joyce’s Dubliners

Or the much-admired, frequently-imitated, and never-surpassed illustrations he does for Notion (we met while I was also working there, and had a memorable conversation—in the middle of a company offsite—about our mutual fondness for Roberto Bolaño)

Well, Roman also writes books, and the English edition of All the Living (a beautifully illustrated story about loneliness, dying, and returning to life) is now available for preorders!

Waking up in Purgatory, a young woman is forced to take part in a lottery, which she wins. Unfortunately for her, since she has had enough of life, the prize is to return to the world of the living and continue her life from where she had left it, with one significant difference: this time, she can see and communicate with ghosts—her own included. Her dull, monotonous life carries on, though her profound solitude is now mitigated by the presence of the ghosts of the dead, most notably her own. She discovers that living with her ghost has its advantages, until this relationship suddenly turns into a spectral triangle...

All the Living is a quiet, melancholy story full of delicate details, and unexpected humor. It’s a slow and subtle meditation on loneliness, rendered in Muradov’s shifting style, full of finesse and sensuality. A parable — at the same time gentle, penetrating, and occasionally profane — that marks the return of a master of the modern graphic novel.

It’s $29 USD and will be released on December 2. I know it’s still October, but it’s not a bad time to start thinking about presents…and this will be perfect for any of your literary–artistic–existentialist loved ones! One reviewer described All the Living as having “a very European sense of humor (the story could be a chapter in a book by Mircea Cărtărescu).”

37 minutes of divine downtempo music ✦

I wrote 80% of this newsletter while listening to Mike Midnight’s set for Ballet’s one year party. I deeply regret missing this event—I was actually in town, but ended up having a heart-to-heart with my friend Britt instead (who generously sacrificed a profound sonic experience in favor of our decade-friendship…I’m sorry! but also, thank you!)

Mike Midnight is the Australian producer and DJ behind Angel Hours, a sublime EP which opens with the most alluring, evocatively sensual song I’ve ever listened to:

It’s okay if you’re not writing, according to Anne Boyer ✦

I’m grateful to my friend Maanav for introducing me to the poet Anne Boyer’s work, and specifically this encouraging passage (excerpted in Bookforum) where she laments all the life that can get in the way of writing:

What is “Not Writing”?

There are years, days, hours, minutes, weeks, moments, and other measures of time spent in the production of “not writing.” Not writing is working, and when not working at paid work working at unpaid work like caring for others, and when not at unpaid work like caring, caring also for a human body, and when not caring for a human body many hours, weeks, years, and other measures of time spent caring for the mind in a way like reading or learning and when not reading and learning also making things (like garments, food, plants, artworks, decorative items) and when not reading and learning and working and making and caring and worrying also politics, and when not politics also the kind of medication which is consumption, of sex mostly or drunkenness, cigarettes, drugs, passionate love affairs, cultural products, the internet also, then time spent staring into space that is not a screen…

Not only that: “There is illness and injury,” Boyer writes, “which has produced a great deal of not writing.”

There is cynicism, disappointment, political outrage, heartbreak, resentment, and realistic thinking which has produced a great deal of not writing…There is being anxious or depressed which takes up many hours though not very much once there is no belief in mental health.

So if you’re not writing, don’t be too upset with yourself. And don’t make the not-writing worse by feeling ashamed and guilty and pathetic! When you’re not writing, you’re living—thinking—doing—trying.

And, of course, you’re reading. I recommend reading Bookforum—a magazine that always helps me begin writing again, for a mere $30 a year!—and I recommend, of course, my own newsletter.

Thank you for being here. If you’ve made it this far, leave a comment or send me an email—I’m honored to have your attention and I’m immensely grateful for your time.

I’ll be back in your inbox in a few weeks, with something about art, AI, or László Krasznahorkai…I haven’t decided yet!

Weizenbaum’s 1976 book, Computer Power and Human Reason: From Judgment to Calculation, is a sustained argument against anthropomorphizing computers. “However intelligent machines may be made to be,” he writes in the introduction, “there are some acts of thought that ought to be attempted only by humans.”

In other histories of artificial intelligence, the second approach is typically described as:

“Subsymbolic” (because it operates at a level below the human-legible symbols and logical expressions of the symbolic approach)

Or “connectionist” (because it relies on mathematical models that approximate the behavior of interconnected neurons in the brain)

I’ve chosen to describe this division as symbolic versus statistical, partly for style reasons—alliteration just sounds nice!—but also because it better conveys, in my opinion, the conceptual differences between the 2 approaches.

My thoughts are a little cracked here for a variety of unrelated life reasons--lovely to see my friend Anne here, enjoying but not trusting the nostalgia pangs of IM and B&W mac screenshots, reminder to resubscribe to Bookforum--but the Lakoff/Johnson frame here is helpful. I was teaching a library workshop on AI (wish I could strikethrough the term for more powerful effect; how about a 1990s-grad-seminar "under erasure" instead) a couple of weeks back and was deliberately attempting not to call it AI because I think a significant portion of our problem here lies in ceding the landscape to framing LLMs through the metaphor of intelligence. I'm enough of a crude historical materialist to know that winning the language battle won't necessarily stem the tide here, but omg how I would love to break that frame.

Celine this was such an excellent read!! I was playing music while reading and now I am listening to no angels / ICU... so DREAMY!

whenever I'm in a slump and haven't read in a long time, reading your writing helps me to feel connected to the practice of reading again. THANK YOU!!! 📖💕 much to think about from this piece!!!